Robots Catching Tennis Balls

Highlights

Insights

1. AI’s true potential is unlocked when it is used to create previously impossible outcomes.

2. LLMs tuned on neuroscience literature may surpass experts in predicting experimental outcomes.

News

1. Ai2 OpenScholar is a retrieval-augmented language model designed to synthesize scientific literature.

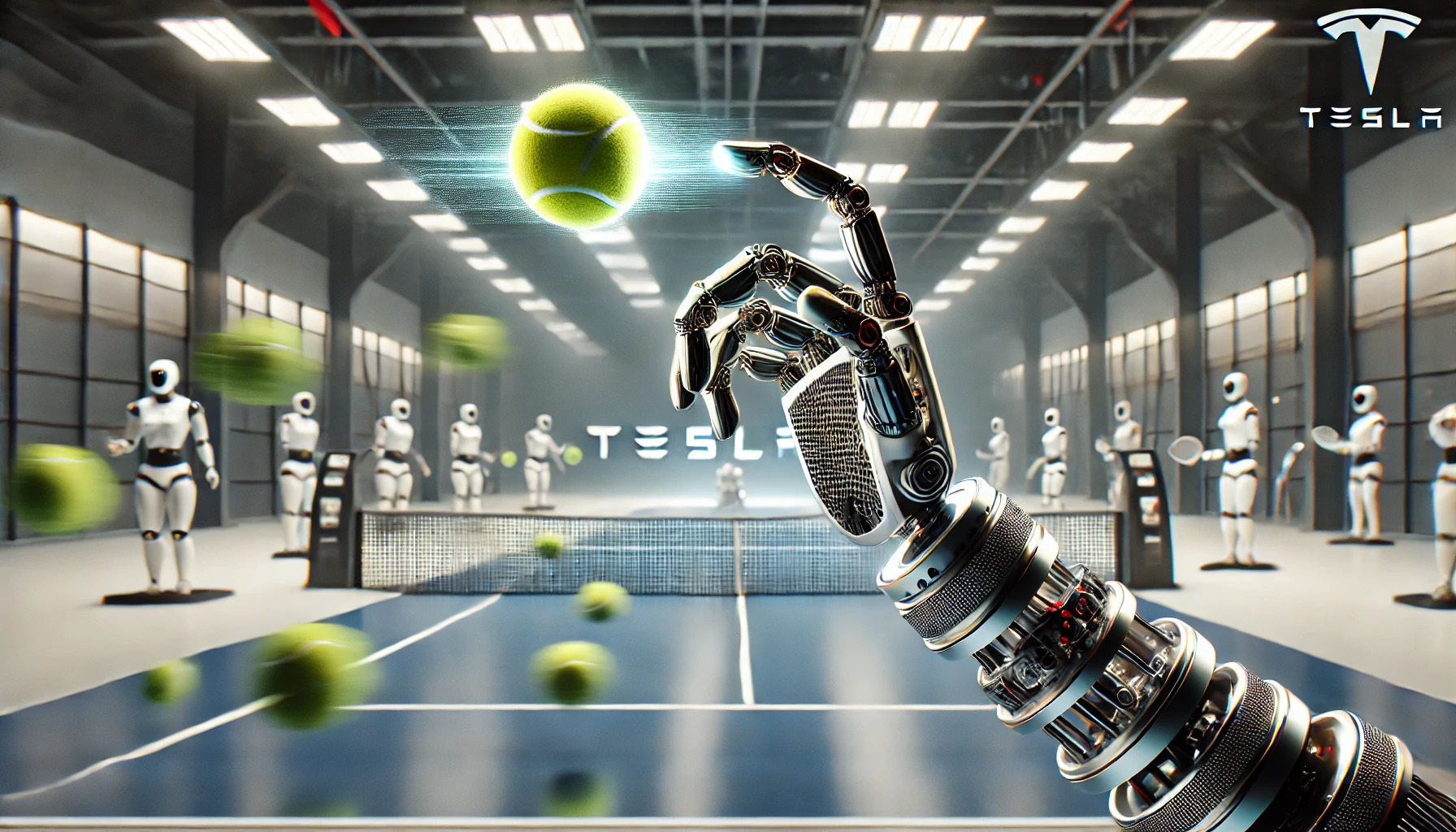

2. Tesla’s Optimus robot can now catch high-speed tennis balls thanks to a new hand upgrade.

3. Anthropic developed the Model Context Protocol (MCP), an open standard designed to connect AI assistants with various data sources.

4. Alibaba has introduced QwQ-32B-Preview as an open competitor to OpenAI’s o1 reasoning model.

5. Nvidia’s new AI audio model, Fugatto, can synthesize entirely new sounds.

6. Luma AI has expanded its Dream Machine AI video model into a comprehensive creative platform.

7. Runway’s new image generation model, Frames, offers unprecedented stylistic control and visual fidelity.

Innovation Insights

1. LLM in creative work: The role of collaboration modality and user expertise (Management Science)

An experiment compared two collaboration modalities: using LLMs as “ghostwriters” versus as “sounding boards.” Using LLMs as sounding boards significantly improved ad quality for nonexperts, while using them as ghostwriters could be detrimental to experts. The ghostwriter approach produced an anchoring effect that led to lower-quality ads, whereas the sounding board approach helped nonexperts produce content similar to that of experts, narrowing the performance gap.

2. AI and the R&D revolution (Financial Times)

An entrepreneurial culture within R&D teams is crucial for fostering transformational innovation. Clean, well-managed data is essential for AI systems, enabling personalized product development and efficient market targeting. Generative AI acts as a co-founder or brainstorming buddy, assisting with ideation, market research, and product testing without replacing human roles. Tools such as CAD-CAM software, digital twins, and 3D printing are making the R&D process more efficient and accessible. AI’s impact on the pharmaceutical industry is profound, potentially shortening drug development by four years and reducing costs by up to 45%.

3. Large language models surpass human experts in predicting neuroscience results (Nature Human Behaviour)

Large language models trained on scientific literature can synthesize vast amounts of research to predict novel results, potentially outperforming human experts. The BrainBench benchmark was created to evaluate this, and findings show that LLMs, particularly BrainGPT, a model tuned on neuroscience literature, surpass experts in predicting experimental outcomes. High-confidence predictions from LLMs are more likely to be correct, pointing to a future where LLMs assist in making scientific discoveries across various fields.
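The claim that high-confidence predictions are more likely to be correct is a statement about calibration. As a rough illustration only, the sketch below groups predictions by confidence and reports accuracy per group. The function name, the binning scheme, and the toy records are all hypothetical and are not taken from the BrainBench study.

```python
from collections import defaultdict


def accuracy_by_confidence(records, n_bins=3):
    """Group (confidence, correct) pairs into equal-width bins over [0, 1]
    and return the accuracy observed within each non-empty bin."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for confidence, correct in records:
        # Clamp so that a confidence of exactly 1.0 lands in the last bin.
        bin_index = min(int(confidence * n_bins), n_bins - 1)
        totals[bin_index] += 1
        hits[bin_index] += int(correct)
    return {b: hits[b] / totals[b] for b in sorted(totals)}


# Hypothetical (confidence, was_correct) pairs, invented for illustration.
toy_records = [
    (0.10, False), (0.20, True), (0.30, False),
    (0.40, True), (0.50, False), (0.60, True),
    (0.70, True), (0.80, True), (0.95, True), (1.00, False),
]

bin_accuracy = accuracy_by_confidence(toy_records)
for bin_index, accuracy in bin_accuracy.items():
    print(f"confidence bin {bin_index}: accuracy {accuracy:.2f}")
```

If accuracy rises from the lowest bin to the highest, as it does for this toy data, confidence is carrying useful information about correctness.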

4. Train your brain to work creatively with GenAI (Harvard Business Review)

Most people prompt generative AI based on their existing paradigms and linear thinking, similar to how they use search engines like Google. However, AI’s true potential is unlocked when it is used to create unique outcomes that wouldn’t be possible without human-machine collaboration, requiring a shift in how we think about AI’s capabilities and expected results. The article provides 12 exercises to help expand thinking around prompting GenAI, encouraging leaders to cultivate a culture where achieving the “impossible” becomes a regular challenge and accomplishment.

5. Building AI trust: The key role of explainability (McKinsey)

Explainable AI (XAI) enhances transparency by clarifying how AI models function, enabling operational risk mitigation, regulatory compliance, continuous improvement, and user confidence. It supports stakeholder-specific needs through global and local explanations and requires a cross-functional, human-centered approach integrated into the AI development lifecycle. By aligning XAI practices with ethical and regulatory standards, organizations can foster adoption, improve performance, and unlock AI’s full potential for value creation while ensuring responsible and human-centric use.
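To make the distinction between global and local explanations concrete, here is a minimal sketch for a simple linear scoring model. It assumes a local explanation is each feature's contribution to one prediction, and a global explanation is the average size of those contributions across a dataset. The weights, feature names, and rows are hypothetical, and real XAI tooling uses more sophisticated attribution methods.

```python
def local_explanation(weights, features):
    """Contribution of each feature to a single prediction of a linear model."""
    return {name: weights[name] * value for name, value in features.items()}


def global_explanation(weights, dataset):
    """Mean absolute contribution of each feature across the whole dataset."""
    n_rows = len(dataset)
    return {
        name: sum(abs(weights[name] * row[name]) for row in dataset) / n_rows
        for name in weights
    }


# Hypothetical linear model and data, invented for illustration.
weights = {"income": 0.5, "debt": -2.0}
dataset = [
    {"income": 4.0, "debt": 1.5},
    {"income": 2.0, "debt": 0.5},
]

local = local_explanation(weights, dataset[0])   # why this one score came out as it did
overall = global_explanation(weights, dataset)   # which features matter most overall
print("local:", local)
print("global:", overall)
```

A local explanation answers a question such as "why was this applicant scored this way," while a global one answers "what does the model rely on in general," which is why different stakeholders tend to need different views.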

AI Innovations

1. Anthropic

Anthropic’s Claude AI now offers custom styles that match a user’s own writing, with presets for formal, concise, and explanatory tones and the option to create personalized styles (The Verge).

The Model Context Protocol (MCP) is an open standard designed to connect AI assistants with various data sources, enhancing their ability to produce relevant responses by overcoming data isolation (Anthropic).

2. Alibaba

Alibaba has introduced QwQ-32B-Preview, positioning it as an ‘open’ competitor to OpenAI’s o1 reasoning model (TechCrunch).

3. Amazon

Amazon has developed a new generative AI model, Olympus, that can process images and videos (Reuters).

4. Ai2

Ai2 has released OLMo 2, new open-source language models that compete with Meta’s Llama (TechCrunch).

Ai2 OpenScholar is a retrieval-augmented language model designed to synthesize scientific literature, outperforming existing models in factuality, citation accuracy, and usefulness for researchers across multiple fields (Ai2).

5. Podcast

ElevenLabs has launched GenFM, a feature for creating multispeaker AI-generated podcasts, similar to Google’s NotebookLM (TechCrunch).

6. Image

Runway’s new image generation model, Frames, offers unprecedented stylistic control and visual fidelity, allowing for consistent yet creatively varied outputs (Runway).

7. Audio

Nvidia’s new AI audio model, Fugatto, can synthesize entirely new sounds by combining various audio traits and descriptions (Ars Technica).

8. Video

Luma AI has expanded its Dream Machine AI video model into a comprehensive creative platform with a new interface, mobile app, and image generation model called Luma Photon (VentureBeat).

Other Innovations

1. Robotics

Tesla’s Optimus robot can now catch high-speed tennis balls thanks to a new hand upgrade (Yahoo!).

2. Data storage

Researchers have developed a diamond-based data storage system with record-breaking density, capable of preserving information for millions of years (New Scientist).

3. Tweaking memory

Tweaking astrocytes, which are non-neuronal brain cells, can selectively suppress or enhance specific memories, revealing their crucial role in memory storage and recall (Nature).